AI-Powered Developer Tools Roundup - 2024-07-06

TypeGPT: LLM Interaction from Any Text Field

TypeGPT is a Python application that lets you query LLMs from any text field in your operating system using keyboard shortcuts.

Key Features:

- Invoke LLMs (ChatGPT, Google Gemini, Claude, Llama3) from any text input field across your system using simple keyboard shortcuts.

- Clipboard integration for handling larger text inputs and image pasting.

- Screenshot capability to capture and include images in queries.

- Customizable system prompt and model versions to tailor AI behavior and responses.

- Installation via provided shell scripts, after which the app runs in the background.

- Flexible usage with commands like /ask, /see, /stop, and model switching options (/chatgpt, /gemini, /claude, /llama3).
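
To illustrate how slash commands like the ones listed above could be recognized in typed text, here is a minimal sketch. It is not TypeGPT's actual code: only the command names come from the feature list, while `Parsed`, `parse_typed_text`, and the split into "action" and "model" commands are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Command names come from the feature list above; the grouping is an assumption.
ACTION_COMMANDS = {"/ask", "/see", "/stop"}
MODEL_COMMANDS = {
    "/chatgpt": "chatgpt",
    "/gemini": "gemini",
    "/claude": "claude",
    "/llama3": "llama3",
}


@dataclass
class Parsed:
    kind: str            # "action", "model", or "text"
    name: Optional[str]  # command or model name, None for plain text
    argument: str        # text that follows the command


def parse_typed_text(text: str) -> Parsed:
    """Classify typed text by its leading slash command (hypothetical helper)."""
    stripped = text.strip()
    head, _, rest = stripped.partition(" ")
    if head in ACTION_COMMANDS:
        return Parsed("action", head[1:], rest.strip())
    if head in MODEL_COMMANDS:
        return Parsed("model", MODEL_COMMANDS[head], rest.strip())
    # Anything else is treated as ordinary typing and left untouched.
    return Parsed("text", None, stripped)
```

Only a command at the very start of the text is recognized here, so a slash that appears mid-sentence is passed through as plain text.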

Source: https://github.com/olyaiy/TypeGPT

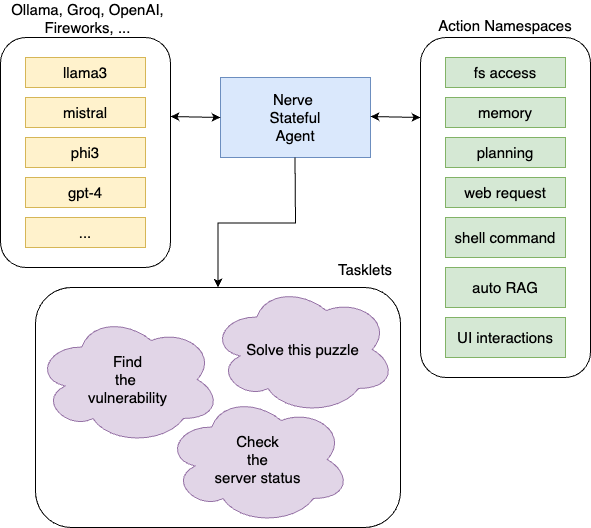

Nerve: Stateful LLM Agents Creator

Nerve is a tool for creating stateful LLM agents that plan and execute tasks autonomously, with no coding required.

Key Features:

- Creates agents that autonomously plan and execute steps to complete user-defined tasks. Nerve dynamically updates the system prompt with new information, which keeps agents stateful across multiple inferences.

- Provides a standard library of actions for agents, including goal identification, plan creation and revision, and memory management.

- Agents are defined using YAML templates, allowing customization for various tasks without coding.

- Integrates with the Ollama, Groq, OpenAI, and Fireworks APIs.

- Runs as a single static binary or Docker container, offering efficiency and memory safety without heavy runtime requirements.
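
The idea of keeping an agent stateful by rewriting its system prompt can be sketched in a few lines. This is an illustration of the general technique only, not Nerve's implementation: `AgentState`, `render_system_prompt`, `apply_action`, the action names, and the scripted stand-in model are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AgentState:
    goal: str
    plan: List[str] = field(default_factory=list)
    memory: Dict[str, str] = field(default_factory=dict)
    done: bool = False


def render_system_prompt(state: AgentState) -> str:
    """Rebuild the system prompt from the current state before every inference."""
    lines = [f"Goal: {state.goal}", "Plan:"]
    lines += [f"  {i}. {step}" for i, step in enumerate(state.plan, 1)] or ["  (none yet)"]
    lines.append("Memory:")
    lines += [f"  {k} = {v}" for k, v in state.memory.items()] or ["  (empty)"]
    return "\n".join(lines)


def apply_action(state: AgentState, action: str, arg: str) -> None:
    """Apply one model-chosen action to the state (hypothetical action set)."""
    if action == "set_plan":
        state.plan = [s.strip() for s in arg.split(";") if s.strip()]
    elif action == "remember":
        key, _, value = arg.partition("=")
        state.memory[key.strip()] = value.strip()
    elif action == "complete":
        state.done = True


def run_agent(state: AgentState,
              model: Callable[[str], Tuple[str, str]],
              max_steps: int = 10) -> List[str]:
    """Loop: render prompt, ask the model for an action, update state."""
    prompts = []
    for _ in range(max_steps):
        if state.done:
            break
        prompt = render_system_prompt(state)
        prompts.append(prompt)
        action, arg = model(prompt)
        apply_action(state, action, arg)
    return prompts


def scripted_model(prompt: str) -> Tuple[str, str]:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if "(none yet)" in prompt:
        return ("set_plan", "list files; summarize")
    if "(empty)" in prompt:
        return ("remember", "files=a.txt,b.txt")
    return ("complete", "")
```

Because each inference sees a prompt rebuilt from the latest plan and memory, the model itself can stay stateless while the agent as a whole carries state from step to step.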

Source: https://github.com/evilsocket/nerve

VisionDroid: Vision-driven Automated Mobile GUI Testing

- A framework that leverages Multimodal Large Language Models (MLLMs) to detect non-crash functional bugs in mobile app GUIs.

- It extracts GUI text information, aligns it with screenshots, and uses the resulting vision prompt to help the MLLM understand the GUI context.

- The system employs a function-aware explorer for deeper GUI exploration and a logic-aware bug detector that segments exploration history for bug detection.

- Evaluation on three datasets shows strong performance compared to 10 baselines. The tool also identified 29 new bugs in Google Play apps, 19 of which have been confirmed and fixed.
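
As a rough illustration of aligning GUI text with a screenshot, the sketch below turns a list of widgets into a numbered text description keyed to pixel regions, which could accompany the image in a multimodal prompt. The paper's actual prompt format is not given here, so `Widget`, `build_vision_prompt`, and the output layout are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Widget:
    text: str
    bounds: Tuple[int, int, int, int]  # left, top, right, bottom in screenshot pixels


def build_vision_prompt(widgets: List[Widget], screen_size: Tuple[int, int]) -> str:
    """Describe labelled widgets by region so text and image can be cross-referenced."""
    width, height = screen_size
    lines = [f"Screenshot size: {width}x{height} px. Labelled widgets:"]
    # Skip widgets without text; they add nothing to the textual side of the prompt.
    visible = [w for w in widgets if w.text.strip()]
    # Reading order: top to bottom, then left to right.
    visible.sort(key=lambda w: (w.bounds[1], w.bounds[0]))
    for index, w in enumerate(visible, 1):
        left, top, right, bottom = w.bounds
        lines.append(f'[{index}] "{w.text}" at ({left},{top})-({right},{bottom})')
    return "\n".join(lines)
```

A real pipeline would also draw the same index numbers onto the screenshot; that step is omitted to keep the sketch free of image libraries.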

Tools you can use from the paper:

Source: Vision-driven Automated Mobile GUI Testing via Multimodal Large Language Model

LLMs for Code Change Tasks: An Empirical Study

- An evaluation of LLMs with over 1 billion parameters on code review generation, commit message generation, and just-in-time comment update tasks.

- Performance improved when in-context examples were provided, but adding more examples did not always yield better results. LoRA-tuned LLMs showed comparable performance to state-of-the-art small pre-trained models.

- Llama 2 and Code Llama families consistently performed best, outperforming small pre-trained models on comment-only changes and performing comparably on other code changes.
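
To make the "examples in the prompt" setup concrete, here is a minimal sketch of building a few-shot prompt for commit message generation with a configurable number of examples. The instruction wording, the prompt layout, and the `build_few_shot_prompt` helper are assumptions for illustration and are not taken from the paper.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]],
                          query_diff: str,
                          k: int) -> str:
    """Prepend up to k (diff, message) examples before the change to be described."""
    if k < 0:
        raise ValueError("k must be non-negative")
    parts = ["Write a one-line commit message for the code change."]
    for diff, message in examples[:k]:
        parts.append(f"Code change:\n{diff}\nCommit message: {message}")
    # The final block is left open for the model to complete.
    parts.append(f"Code change:\n{query_diff}\nCommit message:")
    return "\n\n".join(parts)
```

Sweeping `k` over a small range (for example 0, 1, 2, 4) is one simple way to check on your own data whether additional examples keep helping.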

Tools you can use from the paper:

No tools mentioned in the paper.

Source: Exploring the Capabilities of LLMs for Code Change Related Tasks