AI-Powered Developer Tools Roundup - 2024-07-02

LLMs for Self-Admitted Technical Debt Identification and Classification

- An empirical study evaluates the effectiveness of LLMs, specifically the Flan-T5 family, for identifying and classifying Self-Admitted Technical Debt (SATD) in software development.

- Fine-tuned LLMs outperform the best existing non-LLM baseline, a CNN model, in SATD identification, improving the F1 score by 4.4% to 7.2%.

- For SATD classification, the largest fine-tuned model (Flan-T5-XL) performs best, though the CNN model remains competitive.

- Incorporating contextual information improves the performance of larger fine-tuned LLMs in SATD classification tasks.

Tools and artifacts in the paper:

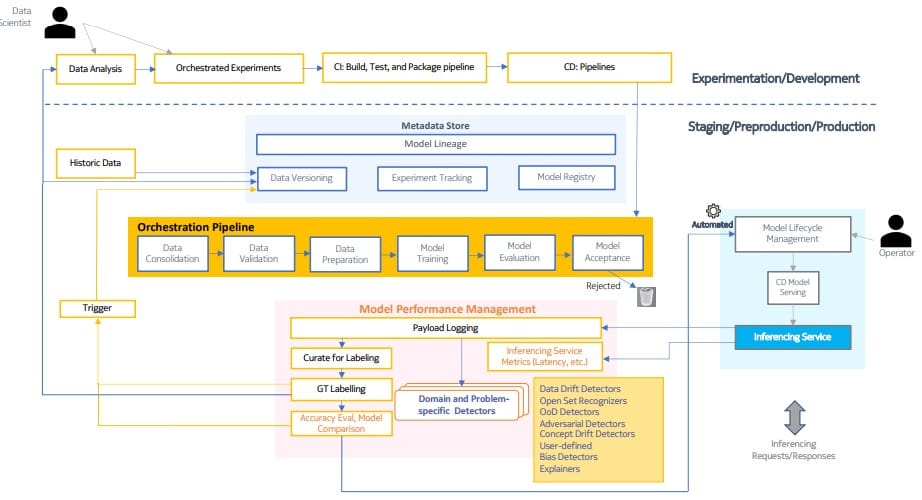
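To make the task framing concrete, here is a minimal Python sketch. It assumes that identification and classification are posed as text-to-text inputs for a Flan-T5-style model. The prompt wording, the helper names, and the `SATD_TYPES` label set are hypothetical illustrations, not the paper's exact setup. The optional `context` argument mirrors the idea of supplying surrounding code, and `f1_score` shows the binary metric used to compare identification models.

```python
# Hypothetical text-to-text framing of SATD tasks for a seq2seq model such as Flan-T5.
# The prompt wording and label set are illustrative assumptions, not the paper's.
from typing import Optional, Sequence

# Example SATD categories (assumed for illustration only).
SATD_TYPES = ("design", "requirement", "defect", "documentation", "test")


def build_identification_input(comment: str, context: Optional[str] = None) -> str:
    """Frame SATD identification as a yes/no text-to-text question."""
    prompt = f"Is this comment self-admitted technical debt? Comment: {comment}"
    if context:
        # Optional surrounding code, mirroring the idea of adding contextual information.
        prompt += f" Context: {context}"
    return prompt


def build_classification_input(comment: str, context: Optional[str] = None) -> str:
    """Frame SATD classification as choosing one label from SATD_TYPES."""
    options = ", ".join(SATD_TYPES)
    prompt = f"Classify the technical debt type ({options}). Comment: {comment}"
    if context:
        prompt += f" Context: {context}"
    return prompt


def f1_score(gold: Sequence[bool], pred: Sequence[bool]) -> float:
    """Binary F1 for identification: harmonic mean of precision and recall."""
    tp = sum(g and p for g, p in zip(gold, pred))
    fp = sum((not g) and p for g, p in zip(gold, pred))
    fn = sum(g and (not p) for g, p in zip(gold, pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

For example, `f1_score([True, True, False, False], [True, False, True, False])` counts one true positive, one false positive, and one false negative. Precision and recall are therefore both 0.5, which gives an F1 of 0.5.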

LLM Performance in Automating MLOps Code Adaptation

- A benchmarking study evaluates how well GPT-3.5-turbo and WizardCoder automatically add MLOps functionality to ML training code.

- The study assesses the models' ability to extend existing code with MLOps components and to translate code between different MLOps libraries.

- GPT-3.5-turbo significantly outperforms WizardCoder, achieving higher accuracy in tasks such as model optimization, experiment tracking, and hyperparameter optimization.

Automating Code Adaptation for MLOps -- A Benchmarking Study on LLMs
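As a rough illustration of what adapting existing code with an MLOps component means, the Python sketch below shows a toy training loop before and after experiment tracking is added. `InMemoryTracker` is a hypothetical stand-in so the example runs with the standard library alone. In real code, an LLM might instead insert calls to an MLOps library such as MLflow, which exposes `mlflow.log_param` and `mlflow.log_metric`. The quadratic objective is an assumed toy problem.

```python
# Illustrative "before/after" of adding experiment tracking to ML training code.
# InMemoryTracker is a hypothetical stand-in for a real tracking library.
from typing import Dict, List, Tuple


class InMemoryTracker:
    """Hypothetical tracker that records parameters and per-step metrics."""

    def __init__(self) -> None:
        self.params: Dict[str, float] = {}
        self.metrics: Dict[str, List[Tuple[int, float]]] = {}

    def log_param(self, key: str, value: float) -> None:
        self.params[key] = value

    def log_metric(self, key: str, value: float, step: int) -> None:
        self.metrics.setdefault(key, []).append((step, value))


def train_original(lr: float, epochs: int) -> float:
    """Original code: gradient descent on f(w) = (w - 3)^2, no tracking."""
    w = 0.0
    for _ in range(epochs):
        w -= lr * 2 * (w - 3)  # gradient of (w - 3)^2 is 2 * (w - 3)
    return w


def train_adapted(lr: float, epochs: int, tracker: InMemoryTracker) -> float:
    """Same loop after adaptation: hyperparameters and per-epoch loss are logged."""
    tracker.log_param("lr", lr)
    tracker.log_param("epochs", epochs)
    w = 0.0
    for epoch in range(epochs):
        w -= lr * 2 * (w - 3)
        tracker.log_metric("loss", (w - 3) ** 2, step=epoch)
    return w
```

The adapted function returns the same weight as the original. The only change is the added logging, which is the behavior-preserving kind of edit this type of adaptation task calls for.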

Execution-Based Evaluation System for NL2Bash

- An execution-based system for validating Bash scripts that LLMs generate from natural-language prompts.

- It runs the predicted script and compares its output with the expected system output.

- The system addresses challenges such as scripts that are semantically equivalent but syntactically different, and scripts that are syntactically correct but semantically incorrect.

- A set of 50 prompts was created to evaluate popular LLMs on NL2Bash tasks.
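The core idea of execution-based checking can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the system described above. It assumes that `bash` is on `PATH`. The expected output is produced here by running a reference script, although the actual system may store expected outputs instead. Each script also runs in a throwaway temporary directory rather than a proper sandbox.

```python
# Minimal execution-based comparison of a predicted Bash script with a reference one.
# Assumptions: bash is on PATH, and scripts are safe enough to run in a temporary
# directory (a real evaluator should use stronger isolation, e.g. containers).
import subprocess
import tempfile
from typing import Tuple


def run_bash(script: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Run a script with bash in a fresh temp dir; return (exit code, stdout)."""
    with tempfile.TemporaryDirectory() as workdir:
        result = subprocess.run(
            ["bash", "-c", script],
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    return result.returncode, result.stdout


def outputs_match(predicted: str, expected: str) -> bool:
    """Judge by behavior, not syntax: same exit code and same stdout."""
    return run_bash(predicted) == run_bash(expected)
```

`outputs_match("echo $((2 + 2))", "echo 4")` is true even though the two scripts differ syntactically. By contrast, `outputs_match("echo 2+2", "echo 4")` is false because the first script is valid Bash that prints the wrong text. Output that depends on the environment, such as the working directory or the current date, would need normalizing before comparison.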